This commentary is the fourth in a series explaining data center electricity use and the nuances in regulating it. You can read the earlier commentaries here, here, and here.

Large-scale, dynamic social and economic change is often more difficult, incremental, and slower than anticipated. Consider James Watt and Matthew Boulton in Birmingham in 1776, when their first commercial steam engines went into service. Watt had patented his key improvement, the separate condenser, in 1769, a breakthrough that would ultimately become a hallmark of the British Industrial Revolution and propel its global spread. Yet it wasn’t until the 1840s that their innovation truly transformed industry.

In 1776, the energy efficiency of Boulton & Watt’s steam engine was a mere 4%: every 100 BTUs (British thermal units) of coal yielded only 4 BTUs of useful work, primarily in pumping water out of tin mines in Cornwall. The rest was waste heat.

Despite this low efficiency, the engine produced enough work relative to the horses and humans it displaced to attract willing buyers. These buyers, in turn, helped Boulton and Watt refine their design, reduce fuel waste, and enhance performance. Competing against water wheels—another technology that was improving, but facing diminishing returns and location limitations—the steam engine took nearly 50 years of incremental improvements to surpass its nearest competitors.

Boulton and Watt also faced input constraints, chiefly the quality of iron and coal and the consistency of their supply. Those supply chain challenges pushed them to figure out how to do more with less.

This economic history parable—one of my favorite stories—offers insights into the challenges facing data center owners and hyperscalers today: managing inputs, efficiency, and waste. One challenge is balancing their electricity demand (input) with their greenhouse gas emissions (waste), for which they have set corporate targets. Another parallel is the inelasticity of input supplies; increasing supply takes time, making change gradual and iterative until it eventually becomes exponential.

Data centers are incredibly energy-intensive, with their global electricity consumption expected to rise significantly in the coming years. In 2022, data centers consumed approximately 200 terawatt-hours (TWh), and this figure is projected to increase to around 260 TWh by 2026. This growth is driven by the exponential increase in digital services, artificial intelligence (AI) workloads, and cloud computing. In the U.S., data centers accounted for about 2.5% of total electricity consumption in 2022, a share that could triple by 2030 (S&P Global March 2024).
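
To put the projection above in annual terms, here is a minimal sketch of the compound growth rate implied by the two consumption figures quoted in the text. The function name is hypothetical and the calculation assumes smooth year-over-year growth, which real demand is unlikely to follow exactly.

```python
def implied_annual_growth(start_twh: float, end_twh: float, years: int) -> float:
    """Compound annual growth rate that takes start_twh to end_twh over `years` years."""
    if start_twh <= 0 or years <= 0:
        raise ValueError("start_twh and years must be positive")
    return (end_twh / start_twh) ** (1 / years) - 1


# Figures quoted in the text: about 200 TWh in 2022, about 260 TWh projected for 2026.
growth_rate = implied_annual_growth(200.0, 260.0, 2026 - 2022)

# Works out to roughly 6.8% per year under the smooth-growth assumption.
print(f"Implied growth: {growth_rate:.1%} per year")
```

A 30% increase over four years therefore corresponds to somewhat under 7% a year, which is rapid for an electricity sector used to nearly flat demand.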

In the first three posts in this series, I developed those economic themes and suggested ways hyperscalers could manage this balance by building their own onsite generation.

The rising electricity demand from data centers is putting substantial pressure on power grids, especially in regions where the energy infrastructure is not equipped to handle such rapid increases. For instance, areas like Northern Virginia, a major hub for data centers, are experiencing challenges in power availability, which is driving developers to explore secondary and tertiary markets with better power access.

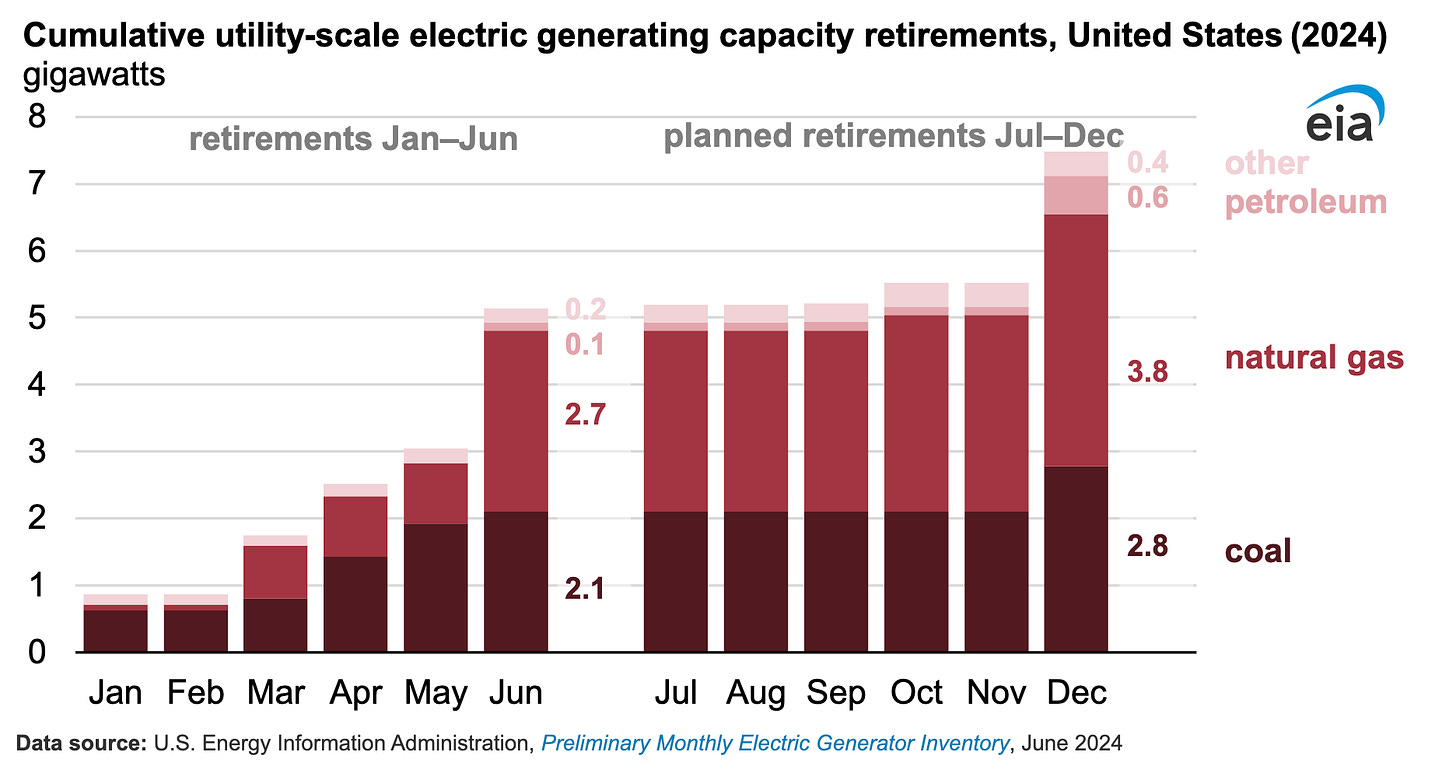

This demand surge has increased reliance on fossil fuel plants, including those previously slated for retirement, thereby complicating efforts to decarbonize the energy grid. Utilities and hyperscalers are both caught between the need to meet the growing energy demands of data centers and the push to reduce carbon emissions. This has led to complex tradeoffs, such as delaying planned coal plant retirements, some of which are likely to be pushed back, or increasing the use of natural gas, which helps in the short run by displacing coal but makes long-term sustainability goals harder to achieve.

While tech companies are investing in renewable energy and efficiency improvements, the rapid expansion of data centers has still led to a notable increase in greenhouse gas (GHG) emissions (The Wall Street Journal, July 3, 2024).

Globally, the emissions from data centers have been relatively modest compared to the surge in digital workloads, thanks to improvements in energy efficiency. Even so, the cumulative effect is significant and adds to the strain on clean energy initiatives. For example, in Ireland, data center electricity use has tripled since 2015 and now represents a substantial portion of national energy demand (International Energy Agency).

In July 2024, Google reported that its greenhouse gas emissions were 48% higher than in 2019 and attributed the increase to AI and data center activity (S&P Global July 2024). Google has set ambitious targets to achieve net-zero emissions across all of its operations and value chain by 2030. To reach this goal, the company is focused on 24/7 carbon-free energy (including hourly matching of demand and supply, a topic for another analysis), renewable energy procurement, and other forms of supply chain decarbonization.

One example is Google’s recent work with Fervo Energy, an innovative developer of enhanced geothermal energy that uses production techniques developed in oil and gas hydraulic fracturing and horizontal drilling. That work includes negotiations with the Nevada utility to develop a specific Clean Transition Tariff to apply to the Fervo contract (see also this Trellis article on the more general tech interest in nuclear and geothermal).

All of this technological, commercial, and strategic innovation takes a lot of time in complex systems, whether that means building steam engines in 1776 or redesigning energy-data systems today. Google has come under criticism for these higher emissions. I disagree with, for example, Katie Collins at CNET when she says if “… Google fails to honor its environmental commitments, it will be making a clear statement about how seriously it values profit versus the planet…If we are to take Google seriously, that number should be going down, not up.”

The hackneyed “profit versus planet” framing is naive and shows a shallow understanding of Google’s strategy and how it perceives its market. But she and other critics are correct in pointing out that there’s a tradeoff here that has to be managed.

The growth of data centers is a double-edged sword for clean energy initiatives. Data centers and the growth of AI have the potential to create the kind of orders-of-magnitude improvement in productivity and living standards that the steam engine ultimately created after its five-decade maturation (and yes, I’m glossing over the potential costs of AI; I acknowledge that but will leave that Pandora’s Box closed for now). Those productivity improvements include things like enabling researchers at PNNL to discover 18 new battery materials in two weeks, a research process that would otherwise take years. AI’s productivity boost as a research tool will be vital for improving energy efficiency, reducing costs, and enabling us to get more from less to reduce waste, again hearkening back to Boulton & Watt.

On the other hand, data centers’ substantial energy demands and associated emissions pose significant challenges to global decarbonization efforts. Addressing these issues should involve enhancing energy efficiency. The Economist’s Technology Quarterly in January 2024 catalogued the energy efficiency improvements in data centers:

> Despite all this the internet’s use of electricity has been notably efficient. According to the IEA, between 2015 and 2022 the number of internet users increased by 78%, global internet traffic by 600% and data-centre workloads by 340%. But energy consumed by those data centres rose by only 20-70%.
>
> Such improved efficiency comes partly from improved thriftiness in computation. For decades the energy required to do the same amount of computation has fallen by half every two-and-a-half years, a trend known as Koomey’s law. And efficiencies have come from data centres as they have grown in size, with increasingly greater shares of their energy use going to computation.
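
The figures in that passage can be turned into a rough energy-per-workload estimate. The sketch below is illustrative only: the function names are hypothetical, and it treats a "workload" as a uniform unit, which the IEA figures do not guarantee. It also shows what the quoted 2.5-year halving period would imply over the same seven years.

```python
def energy_per_workload_change(workload_growth: float, energy_growth: float) -> float:
    """Fractional change in energy used per unit of workload.

    Both arguments are fractional increases, e.g. 3.40 for a 340% rise.
    """
    return (1 + energy_growth) / (1 + workload_growth) - 1


def koomey_factor(years: float, halving_period_years: float = 2.5) -> float:
    """Factor by which energy per computation shrinks after `years`,
    assuming it halves every `halving_period_years` (the figure quoted above)."""
    return 2 ** (years / halving_period_years)


# Quoted figures for 2015-2022: workloads up 340%, energy use up 20-70%.
best_case = energy_per_workload_change(3.40, 0.20)   # energy grew least
worst_case = energy_per_workload_change(3.40, 0.70)  # energy grew most

print(f"Energy per workload changed by {best_case:.0%} to {worst_case:.0%}")
print(f"Koomey factor over 7 years: {koomey_factor(2022 - 2015):.1f}x")
```

On these assumptions, energy per workload fell by roughly 61% to 73%, while a strict 2.5-year halving would cut energy per computation about sevenfold over the same period. The two measures are not directly comparable, since workloads and computations are different units, but the comparison gives a sense of scale.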

Taking advantage of economies of scale in data center size helps efficiency, although such scale has diminishing returns. Recent improvements in low-carbon concrete reduce the carbon intensity of the building itself (and it’s a really cool innovation!).

One significant way to reduce greenhouse gas emissions and improve energy efficiency is through the adoption of liquid cooling systems in data centers. Unlike traditional air cooling, liquid cooling employs fluids, typically water or a dielectric liquid, to absorb and dissipate heat directly from high-performance components like central processing units (CPUs) and graphics processing units (GPUs), which generate substantial heat during operation.

Several types of liquid cooling systems are available, including direct-to-chip cooling, where coolant circulates through cold plates attached directly to processors, and immersion cooling, where servers are fully or partially submerged in a dielectric fluid that circulates to remove heat. These methods surpass traditional air cooling in efficiency because liquids have a higher heat capacity and thermal conductivity, allowing for more effective heat transfer and thermal management.

For instance, Nautilus Data Technologies uses a water cooling system where cold water circulates to extract heat from servers. The warm water generated can then be repurposed for other onsite applications. This system is water-efficient and boasts a Power Usage Effectiveness (PUE) of 1.15 or lower, demonstrating high efficiency compared to conventional data center cooling designs.
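
PUE is the ratio of a facility's total energy use to the energy used by its IT equipment alone, so a value of 1.0 would mean no overhead at all. The sketch below shows what the quoted 1.15 implies. The function names are hypothetical, and the baseline PUE of 1.5 is an assumed comparison point chosen for illustration, not a figure from the source.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy divided by IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("it_equipment_kwh must be positive")
    return total_facility_kwh / it_equipment_kwh


def overhead_share(pue_value: float) -> float:
    """Share of total facility energy spent on cooling, power conversion, and other non-IT uses."""
    return 1 - 1 / pue_value


def facility_energy_saving(pue_new: float, pue_baseline: float) -> float:
    """Fractional cut in total facility energy for the same IT load."""
    return 1 - pue_new / pue_baseline


QUOTED_PUE = 1.15                # figure cited in the text
HYPOTHETICAL_BASELINE_PUE = 1.5  # assumption for illustration only

share = overhead_share(QUOTED_PUE)
saving = facility_energy_saving(QUOTED_PUE, HYPOTHETICAL_BASELINE_PUE)

print(f"Overhead share at PUE {QUOTED_PUE}: {share:.1%}")
print(f"Saving versus assumed baseline of {HYPOTHETICAL_BASELINE_PUE}: {saving:.1%}")
```

At a PUE of 1.15, about 13% of the facility's energy goes to something other than computing. Against the assumed 1.5 baseline, the same IT load would need roughly 23% less total energy.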

Liquid cooling supports higher power densities, an increasingly crucial factor as data centers evolve to manage more computationally intensive tasks like AI and big data analytics. Enhanced thermal management through liquid cooling enables closer hardware packing, potentially reducing the data center’s physical footprint. These systems can also diminish or even eliminate the need for extensive air conditioning and ductwork, leading to lower operational costs and a reduced environmental impact, particularly in terms of water and energy consumption.

Liquid cooling does have challenges and costs. The initial capital investment for implementing liquid cooling systems is generally higher than for traditional air cooling, due to the specialized equipment and infrastructure required. Maintenance is more complex as well, necessitating specific expertise to manage the liquid systems and prevent leaks or other issues that could jeopardize data center operations. Retrofitting existing data centers with liquid cooling can be especially expensive and technically challenging, which may limit its adoption to new construction or specific high-performance computing environments, though numerous such new projects are underway.

Much like Boulton and Watt in their time, modern data center companies pursue commercial objectives with strategies to implement them but face tradeoffs and complexities that often delay their achievement. The challenges of managing inputs and waste are neither new nor unique. The importance of efficiency and innovation in addressing these challenges and achieving objectives remains vital, even if it takes longer than initially anticipated.

A version of this commentary was first published at Substack in the newsletter Knowledge Problem.